Something I’ve noticed lately in AI discourse is a ramping up of the sentiment that AI—including in its current “generative” incarnation—will address the problems of “human error” in all kinds of media.

I think the first time I really encountered this way of thinking was during the advent of “self-driving” cars. The argument went that, with machines in control, all kinds of cognitive or perceptual mistakes humans could make behind the wheel (not paying attention, not being able to see certain angles around the car, having a dirty mirror, drifting off to sleep) would be eliminated. Ergo, self-driving cars would be safer than human-driven cars. But years on, self-driving cars are not performing with perfect rationality or accuracy, and, absent any specific theoretical framework, it’s unclear how we’d even recognize it if they were.

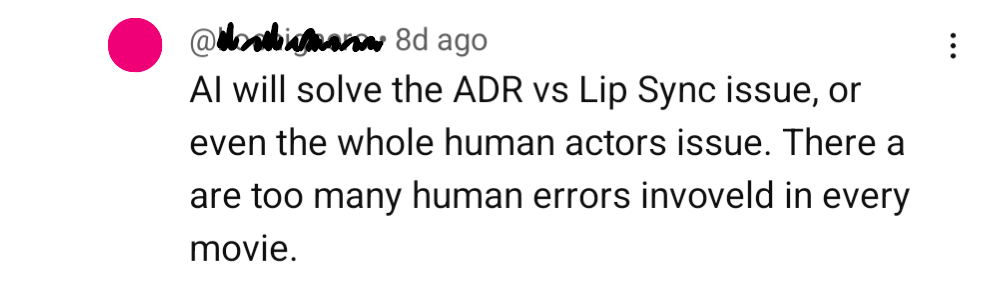

But what’s interesting to me here is not whether AI (or any technology) can rid us of human error. What’s interesting is what we consider human error and, for each of the many answers to that question, what overarching impulse drives us to get rid of it in the first place. In the screenshot above (taken from a video about why Madame Web is such a weird movie), the central idea is “the whole ‘human actors’ issue”: the idea that human actors are too unpredictable, too susceptible to flaws in performance, and too inefficient to create a perfect movie. According to the commenter, AI will finally make that perfect movie possible. Absent from this line of thinking is any description of what a movie perfected by AI would be like or what, if anything at all, we might experience when we watch it.

Here’s another example, plucked from a thread that’s nominally about the capabilities of a specific AI model:

Her face doesn’t look the same at all???? How is this a “restoration” https://t.co/8ZesY0LNOV

— tina snow aegyo (@xoxogossipgita) January 10, 2024

The original poster calls this “restoration.” Why? Because it contains more detail than the frame they started with. I happened on this thread when an old colleague reposted it, and I replied then with something I still believe now: that in AI manipulations like this, success is measured by how much the model does, not by what it does, and the value of the intervention by how deep it goes.

I think it’s a misplaced belief that the substance of a subjective work is in its aesthetics. I think the worth of the output is reliant on that framing in examples like this, so they give a positive evaluation to random changes.

— Liam Spradlin (@iamliam) January 11, 2024

Measuring generative output this way is another symptom of the desire to transcend error, just turned in a different direction. The error in this case is the low resolution of the original film, which was shot in the mid-’80s, well before digital filming (let alone HD transmission) was a viable technology. The lack of detail this imparts to the screenshot is treated as a flaw for technology to overcome. In this framing, it doesn’t particularly matter whether the details the AI adds are accurate. We should just be dazzled by their aesthetic richness. Just don’t ask where Madonna’s hands are. Other examples in the thread are even more laughable (for example, one adds bags under the eyes of the subjects in Jan van Eyck’s Arnolfini portrait).

In this context, “error” can be anything aesthetically imperfect or incomplete, any unpredictable or challenging output, any output that requires human interpretation to become complete, or any output that is open to multiple interpretations. In other words, error can be seen as subjectivity itself.

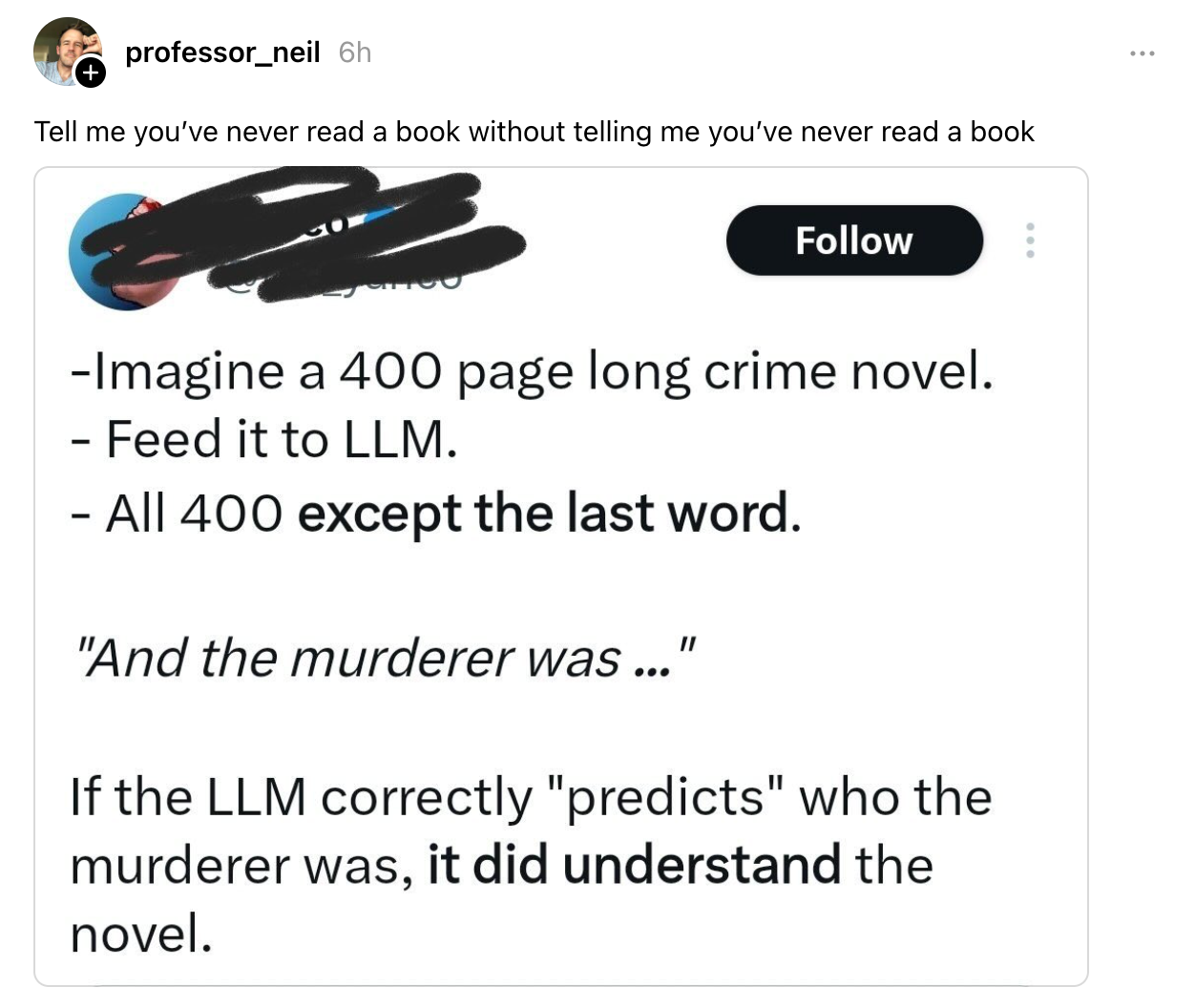

Finally, a post on Threads caught my eye: the original poster proposes that you could feed every word of a crime novel (except the ending) into an LLM and test whether it “understands” the plot by asking it who the killer is. Leaving aside the question of what it would even mean for a computer to “understand” that kind of information, replies on Threads correctly point out that the post frames the novel as essentially a math problem. In this framing, assuming all the other pieces are there, an entity has successfully engaged with the work if it can produce the one teeny tiny cog (a single word!) that’s missing. That completely misses the point of reading (or writing) a book in the first place. It evokes a vision of the world in which pushing a button can produce concrete, well-understood, and, most importantly, accurate or unquestionable answers.

So what’s going on here? I think all of these examples sprout from the same root. That root is a fundamental misunderstanding of the subjective substance of media, driven by a way of looking at the world that understands it as information or believes that it can be fully described using information. To put it more coarsely, the world as data. Luciano Floridi, in The Philosophy of Information, calls this “digital ontology,” a belief that operates at various levels of detail to understand the universe as being either directly computable or itself literally a computer. I think this way of seeing the world has seeped into the discourse around technological progress for a few reasons.

The first and most obvious reason is that technology is itself highly systematic. Computers have no capacity for subjective interpretation. Code is literal; binary is 1 or 0. The fact that code lets us extend our capabilities, accomplish new kinds of tasks, and interpret information leads us to extrapolate its power, incorrectly, to the entire human experience, including aesthetic experience and the subjective interpretation of art.

The second, and more important, reason is that this way of thinking provides an avenue to something irresistible: the ability to transcend error by transcending subjectivity itself, reaching an invulnerable, perfectly calculable state of being that only such a highly systematic invention could afford us. The implications are broad, ranging from individual intellectual comfort (or complete philosophical abdication) to the infinite, predictable growth promised (demanded) by capitalism.

Finally, and relatedly, some of the people who interpret media this way in order to show how well this incarnation of “AI” performs have a stake in doing so. One of the examples I cited was explicitly promoting a specific AI model. This third category of motivation reminds me a lot of the NFT days, when broad transformation was promised in exchange for a few JPEG downloads. That movement, too, often fell into the trap of completely misunderstanding why art is made or what makes it “valuable” to people, for any definition of “value” other than money.

As we continue to figure out the best uses for any new technology, I think it’s important to resist these impulses and to ask how it can best enhance subjective experience and help us make meaning, rather than replace that experience with something that can be comfortably measured.

Like design itself, the things we create have a subjective impact whether or not we’re paying attention to it. Only when we pay attention can we put that impact to good ends.