[Editor's note: This post was originally published in 2015 as I was beginning to explore practical ideas for how to make individualized design a reality. In the intervening years, this sentiment has begun to show up in design systems throughout the industry, while the radically comprehensive mode of adaptation still lies ahead.]

Design shouldn’t just adapt to screen size.

Context isn’t all about adding more information.

These two ideas are the basis for a far-reaching design exploration that — I hope — will spur further exploration into mutative design.

In August, I spoke with a talented developer and designer, Jae Bales, about an app she was working on to help children learn to code using basic concepts and a simple drag-and-drop UI. We discussed possible ways of ensuring that the app could appeal to and be useful for children from 3 to 13 while still seeming appropriate. There was a spark here — could the app change design or copy based on the user’s age, changing as the user ages to keep them engaged?

On October 1st, I published the third episode of Design Notes, a podcast I started in collaboration with developers and designers to gather insights into the design process through the lens of their own work. In the episode, I talked to Roman Nurik, a design advocate and developer who works at Google. We covered many things, but one topic in particular stuck with me, and it was one we had touched on before: the idea that, in the future, interfaces and experiences won’t just change based on screen size or density, but will change based on real-world factors specific to the user. There it was again. Can an experience change based on the individual user? Should it? How would this work?

The challenge is a fascinating one. How is it possible to design something that accommodates such a wide, seemingly endless expanse of use cases? Does it even make sense to attempt that? Is there some new methodology that we can build up around this to make it possible? Are there any pieces at all that are possible today, without ambiguously putting this burden on “machine learning” and calling it a day?

This post will seek to take a first step into mutative design. We won’t answer every question or solve every problem here, but hopefully we’ll get down a good foundation for conversation that can keep the nascent methodology moving forward.

Does this make sense?

So first, before we go any further, does this actually make sense to pursue? Would mutative design be interesting or at least useful to real people?

Since you’re reading a post about it that didn’t already end, my answer is probably obvious, but I think the answer is “absolutely.” This is the sort of direction I predict interface design will take in the future — one that adapts to users intimately, and provides what they need not just in terms of content but in terms of interaction and experience. I believe this for a few reasons.

Primarily, the current way just doesn’t feel stable or sustainable. Right now, interfaces, even those based on research and data, are built for averages. Users are averaged out into a few personas for whom the interface accounts, even if the product has millions of users. Certainly we can do better and build a system that can account for 100, 1,000, or 1 million personas or more. In the current system, continued post-launch data can inform future design decisions, but the gaps are too big.

Besides these gaps, the system of designing for averages often imparts nearly unsolvable design problems. When designing for averages, accessibility is too often left behind or partially implemented, because accommodating every point of accessibility would sacrifice other aspects of the design. Why can’t we design for sets of individual scenarios and let the interface mutate between those for more specific cases, producing a no-compromise design for every single user?

Second, there are many people in the world who don’t have access to mobile or internet-connected devices right now but who will soon, and there are people who are just getting access for the first time, or who are getting exposed to these technologies for the first time.

As designers we have the privilege of building our work on a legacy of existing interaction paradigms that users have somehow kept up with, but relying on that legacy excludes those who missed out on the first chapters of the digital interface conversation.

We must account for all of this, and mutative design could be the solution.

In a way, I would consider Project Phoebe, this exploration of mutative design, a “moonshot” idea. It’s not realistic to expect 100% execution now, but there are pieces we can solve. I believe it would represent a 10x improvement on designing apps for everyone, and more research can be done to establish some practices around the idea.

A new methodology

“You don’t square up to every weed in this field with a righteous fight; you change something in the soil.” — Keller Easterling at SPAN 2015

I began thinking of this approach as “mutative design,” a design method that accounts for the interface and experience mutating on its own, perhaps imperceptibly, perhaps evolving with great leaps, but changing in a potentially unquantifiable number of ways according to the user’s needs and actions.

The easiest comparison I could make was to video games. I grew up playing Game Boy games like Pokémon, and I remember playing Tomb Raider on the PS1 for the first time and being amazed that the character could move in three dimensions. How is this possible, I thought. Whoever made this can’t have possibly coded every possible location and position into the game, right?

At 8 years old I had a hilariously lacking perception of how video games were made, but the feeling here is the same. To achieve a design that is truly able to change in this many ways, we need a new methodology. The same way developers decided how Lara Croft looks when she runs, jumps, uses key objects, pushes blocks, etc. we must decide — for example — how touch targets look when a user is color-blind, low-vision, a child, disabled, etc. and let the design structure move fluidly between changeable states in the same way.

Fundamental structure

The easiest way I found to think about the basic underlying structure of mutative design was a grid of dots. Each dot would represent a potential mutation state that takes into account every dot connected to it in the rows above.

So we need to begin with a top row of “starter states.” This is a crucial part of the grid because it’s where we learn physical or fundamentally factual characteristics about the user or their environment. These are things that shape how the user is using their device at the moment they open a specific app, and that would affect how they use every app.

That said, this is only the first mutation, which greets the user when they first open an app. And many of these characteristics (as we’ll discuss shortly) can and should be learned by the system. Once we move to the next row of dots, the fun really begins.

For this exercise I’ve come up with a manageable list of characteristics, with letter codes for later.

- Age (A)

- Exposure to/experience with technology (E)

- Vision level/type (V)

- Physical ability (P)

- Language (L)

- Data availability (D)

- Lighting (S)

We can then break these factors out into potential variant states.

- Age: Infant, Toddler, Child, Adult, Elder

- Vision: Sighted, Low vision, Blind

- Vision B: Colorblind (Deuteranomaly, Protanomaly, Tritanomaly), Not colorblind

- Tech exposure: New, Familiar, Experienced, Power user

- Physical ability: Limited input, Voice only, Touch only, Visual manipulation, Unlimited input

- Language: Localization, RTL, LTR

- Data availability: No data, low data, full data

- Lighting: Darkness, normal lighting, bright lighting

Beyond this there could be ephemeral states that account for certain extra-characteristic use cases, like what happens if the user is riding a bike, has wet hands, or isn’t within eyesight of the device. These states wouldn’t have long-lasting mutative effects, but would serve their purpose in the moment.

Taking into account the starter state, we mutate to and between new states based on new learnings from the user. It goes on like this for the lifespan of the app. Sometimes the interface won’t need to mutate beyond starter states, sometimes the user could have a long and winding journey. Either way, mutative design should accommodate.

The experience should move seamlessly between these states, but the actual dots themselves are good opportunities to explore and crystallize the interface for specific cases.

Meet the user

So back to potential “starter state” conditions, how do we figure out what starter state the user is in?

A lot of the items on our list can actually be learned from the user’s system configuration. In an ideal world, the system itself would be able to learn these starter states without even asking, but the specific road to that achievement is outside the scope of this post.

Things like age and language would be determined by the user’s device account (be it Google or otherwise). Vision level and type would be determined by accessibility settings at the system level (though more detailed tracking could certainly be done beyond explicit settings). Data availability and lighting would come from the device’s sensors and radios.

Things like physical ability, though, could be determined silently by the system. Ideally the system (whatever theoretical system or OS we’re talking about) would include a framework for detecting these characteristics, and individual apps would accept these cues that would inform the interface and experience according to what elements and layouts the app actually used.

Invisible calibration

One way to invisibly calibrate the interface would be a first-run process. We see first-run flows in many apps (check out UX Archive for plenty of examples). They help the user get acquainted with a new product, introduce features, and guide the user through any initial housekeeping tasks that need to be done before starting.

But even while doing this, we can secretly determine things about finger articulation, physical accuracy, and perhaps even things like precise vision level and literacy.

One example would be adaptive touch targets.

The normal touch target size for Android is about 48dp (assumed to be the size of a human fingertip — that’s 144px on a device with a density of around 480dpi) but that’s the actual touch target size, not the visual size.

So for a normal toolbar icon, there would be about a 20dp visual size because it’s what the user can see, but when the user touches that icon it could be anywhere in a 48dp box.
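To make the arithmetic above concrete, here is a minimal sketch of the dp-to-pixel conversion, assuming Android’s convention that 1dp equals 1px at 160dpi. The function and variable names are mine, for illustration only.

```python
def dp_to_px(dp: float, dpi: float) -> float:
    """Convert density-independent pixels to physical pixels.

    Assumes the Android baseline where 1dp == 1px at 160dpi.
    """
    return dp * dpi / 160.0


# The numbers used in the text: a 48dp touch target and a roughly 20dp icon
# on a device with a density of around 480dpi.
touch_target_px = dp_to_px(48, 480)  # 144.0
icon_px = dp_to_px(20, 480)          # 60.0

# Invisible touchable margin on each side of the icon.
margin_px = (touch_target_px - icon_px) / 2  # 42.0

print(touch_target_px, icon_px, margin_px)
```

The margin is the part a mutative interface can grow or shrink without the user ever seeing a change.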

A first-run process (like initial device setup) could theoretically pay attention to acuity when it comes to touch targets by nesting invisible targets around the visual target in expanding radii and measuring how far from the visual target each touch lands, with touches in the outer rings indicating lower accuracy.

This information combined with age could give us a pretty clear idea of how big touch targets need to be in the actual interface for this particular user, but touch targets could still mutate within the design as the user goes for example from toddler to child, if we see that their accuracy is improving.
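The nested-radii idea could be sketched roughly as follows. This is only an illustration under my own assumptions: the ring radii, the coverage threshold, and the function names are all hypothetical, and age is left out to keep the example small.

```python
import math

# Hypothetical ring radii in dp, nested around the center of the visual target.
RING_RADII_DP = [10, 24, 36, 48, 64]


def miss_distance(tap, center):
    """Distance between where the user touched and the center of the visual target."""
    return math.hypot(tap[0] - center[0], tap[1] - center[1])


def required_radius(taps, center, coverage=0.75):
    """Return the smallest ring radius that contains at least `coverage` of the taps.

    Returns None when even the largest ring does not catch enough taps.
    """
    if not taps:
        raise ValueError("need at least one tap to calibrate")
    distances = [miss_distance(tap, center) for tap in taps]
    for radius in RING_RADII_DP:
        hits = sum(1 for d in distances if d <= radius)
        if hits / len(distances) >= coverage:
            return radius
    return None


# Five taps recorded during a first-run flow, relative to a target centered at (0, 0).
taps = [(3, 4), (0, 5), (12, 16), (6, 8), (20, 21)]
print(required_radius(taps, (0, 0)))  # 24
```

A radius of 24dp corresponds to the usual 48dp target; a user whose taps only fit in the outer rings would get a larger starting target.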

This is just one invisible way we meet the user.

Fundamental doubt

Interface designers have probably already spotted this — the fundamental doubt in this approach. The potential problem is two-fold.

User confidence

First, user confidence.

It’s important that users feel comfortable in an interface, and feel like they know how to use it and what to expect. Likewise, the user should ideally be able to hand off their device to someone else without that second user feeling overwhelmed. How then, if the experience is mutative, can we foster that feeling?

This doubt has to be addressed before we prepare the canvas with a practical app example.

The answer to this question is perhaps too vague, but it boils down to two steps. First, the interface shouldn’t “pull the rug out” from underneath the user in one big leap. In other words no mutation should see the interface lose an arm and grow three more eyes — mutations should happen at a steady, organic pace wherever possible. This, again, is why the starter state is so important — it will get the user to an initial comfortable state immediately, and further smaller mutations would happen from there.
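One way to picture a “steady, organic pace” is to cap how far any single property may move per mutation. The sketch below assumes a hypothetical list-spacing value and a hypothetical per-session step limit; neither number comes from a real guideline.

```python
def step_toward(current: float, target: float, max_step: float) -> float:
    """Move `current` toward `target`, changing by at most `max_step`."""
    delta = target - current
    if abs(delta) <= max_step:
        return target
    return current + max_step if delta > 0 else current - max_step


# Growing list spacing from 56dp to 72dp, no more than 4dp per session.
spacing = 56
history = []
while spacing != 72:
    spacing = step_toward(spacing, 72, 4)
    history.append(spacing)

print(history)  # [60, 64, 68, 72]
```

The starter state would set the initial value in one go; only the later mutations are rate-limited like this.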

The second step is simply maintaining a strong consistency in visual and interaction patterns. Within the app, and aware of its possible mutations, keep an internally consistent and intentionally brief dictionary of pieces that the user will still be able to recognize no matter what ends up happening. This means everything from typography to basic button styles.

Supporting infinite characteristics?

And here’s the second problem: just how many characteristics should we measure? If we aren’t careful, it’s easy to fall into the trap of choosing which characteristics we’ll support based on who we think will use the app. That lands us right back at the problem we’re trying to solve.

I think in the short term we can’t escape establishing some set of best practices for these considerations, defining a set of characteristics that are agreed to be representative of most possible variations in how users will use and experience a device. In the long term? I will shove this off to machine learning. Once we have a sufficient amount of data, I think it would be reasonable to assume that characteristics could be recognized, learned, and accounted for by machines, with the list of characteristics continuing to grow as the system meets more types of users.

Deciding to mutate

Once we’ve established starter states, the app could continue to change its interface in subtle ways — for instance in a contacts app, providing quick action icons based on the most common means of communication with certain contacts — but relative to our goals, that’s easy. What we’re really focused on is staying in tune with the user’s real characteristics, those aspects that would change how they interact with the device on a level surpassing app functionality.

Let’s say the user has a degenerative vision condition. How would that play out behind the scenes in a mutative app? Let’s think through a very basic flow where the app would decide to expand a visual target or increase color contrast based on ongoing user behavior.

Some cases aren’t that clear, though. For example, what if the user is consistently hitting slightly to the top right of the visual target? We could move the visual target slightly up and to the right, but should we?

After all, if this is a problem of perception and not of physical acuity, then moving the target may cause the user to keep tapping up and to the right, on a trail leading right off the edge of the canvas. Alternatively, does it even matter that the touches are inaccurate? I would argue that it does, because other areas of the interface directly surrounding the visual target may be targets themselves, or may become targets after later mutations.

In this case, the app could subtly try two different approaches — one suited for perception, and one for physical acuity. Try moving the visual target just a bit within reasonable bounds (meaning you don’t necessarily have to scoot a button on top of some other element). If the user’s success rate isn’t improved (or they keep tapping up and to the right still), enlarge the visual target. If the success rate evens out, perhaps it’s okay for the button to slowly scoot back to its spot.
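The try-one-then-the-other approach described above could look something like this small decision function. It is a sketch under stated assumptions: the success threshold, the maximum nudge, the enlarged scale, and all names are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass, replace

SUCCESS_THRESHOLD = 0.9  # hypothetical: share of taps that land on the target
MAX_NUDGE_DP = 4.0       # hypothetical "reasonable bounds" for moving the target
ENLARGED_SCALE = 1.25    # hypothetical size increase for the visual target


@dataclass(frozen=True)
class TargetState:
    dx: float = 0.0     # horizontal nudge of the visual target, in dp
    dy: float = 0.0     # vertical nudge, in dp (negative is up on screen)
    scale: float = 1.0  # visual size multiplier


def clamp(value, limit):
    return max(-limit, min(limit, value))


def ease_back(value):
    """Halve a nudge, snapping to zero once it becomes negligible."""
    half = value / 2
    return 0.0 if abs(half) < 0.5 else half


def next_state(state, success_rate, mean_miss):
    """Pick the next mutation from the recent success rate and mean miss offset."""
    nudged = (state.dx, state.dy) != (0.0, 0.0)
    if success_rate >= SUCCESS_THRESHOLD:
        # Things have evened out: let the target slowly scoot back to its spot.
        if nudged:
            return replace(state, dx=ease_back(state.dx), dy=ease_back(state.dy))
        return state
    if not nudged and state.scale == 1.0:
        # First attempt (perception): move the target toward where taps land.
        dx = clamp(mean_miss[0], MAX_NUDGE_DP)
        dy = clamp(mean_miss[1], MAX_NUDGE_DP)
        if (dx, dy) != (0.0, 0.0):
            return replace(state, dx=dx, dy=dy)
    if state.scale < ENLARGED_SCALE:
        # Nudging did not help (physical acuity): enlarge the visual target.
        return replace(state, scale=ENLARGED_SCALE)
    return state


s1 = next_state(TargetState(), 0.6, (6.0, -3.0))  # nudge up and to the right
s2 = next_state(s1, 0.6, (6.0, -3.0))             # still missing, so enlarge
s3 = next_state(s2, 0.95, (0.0, 0.0))             # success, so ease back
print(s1, s2, s3, sep="\n")
```

Whether the enlarged size should also relax over time is left open here, as it is in the text.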

As Project Phoebe continues with more research and conversation, we can hope to create a set of best practices for problems like these, but for now we should be open to trying many theoretical approaches and figuring out the technical details once those are settled.

Preparing the canvas

So, knowing the possible “starter states” for a mutative design, and advancing confidently in the direction of our mutative dreams, we need something to design so we can keep exploring the approach.

We’ll walk through two examples in this post, named Asteria and Leto. One app, Asteria, is a contacts app and the other, Leto, is a launcher.

It makes sense to start each exploration with a pair of “starter states,” so we can see how they compare and how they might mutate on two different paths.

To make this exploration more interesting, I wanted to avoid choosing the starter states myself, so I randomized numbers for each variable, corresponding to a particular value of that variable. So for example Age has values 1 through 5, where a randomly-chosen A5 would mean an elder user, and A1 would mean an infant.

Hopefully this randomization will help to illustrate that mutative design should work for any user.
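For anyone who wants to roll their own starter states, the randomization can be sketched in a few lines. The variant table mirrors the list earlier in the post (language and lighting are left out, as they are in the codes used here); the function names are my own.

```python
import random
import re

# Letter code -> variant states, numbered from 1 in the order listed in the post.
VARIANTS = {
    "A": ["Infant", "Toddler", "Child", "Adult", "Elder"],
    "E": ["New", "Familiar", "Experienced", "Power user"],
    "V": ["Sighted", "Low vision", "Blind"],
    "B": ["Colorblind", "Not colorblind"],
    "P": ["Limited input", "Voice only", "Touch only",
          "Visual manipulation", "Unlimited input"],
    "D": ["No data", "Low data", "Full data"],
}


def random_starter_state(rng):
    """Build a code such as 'A4E1V1B2P5D2' by picking one value per variable."""
    return "".join(
        f"{letter}{rng.randint(1, len(values))}"
        for letter, values in VARIANTS.items()
    )


def decode(code):
    """Translate a starter-state code back into readable labels."""
    return {
        letter: VARIANTS[letter][int(number) - 1]
        for letter, number in re.findall(r"([A-Z])(\d+)", code)
    }


print(random_starter_state(random.Random()))
print(decode("A4E1V1B2P5D2"))
```

Decoding `A4E1V1B2P5D2` gives an adult who is new to technology, sighted, not colorblind, with unlimited input and low data.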

Example 1: Asteria

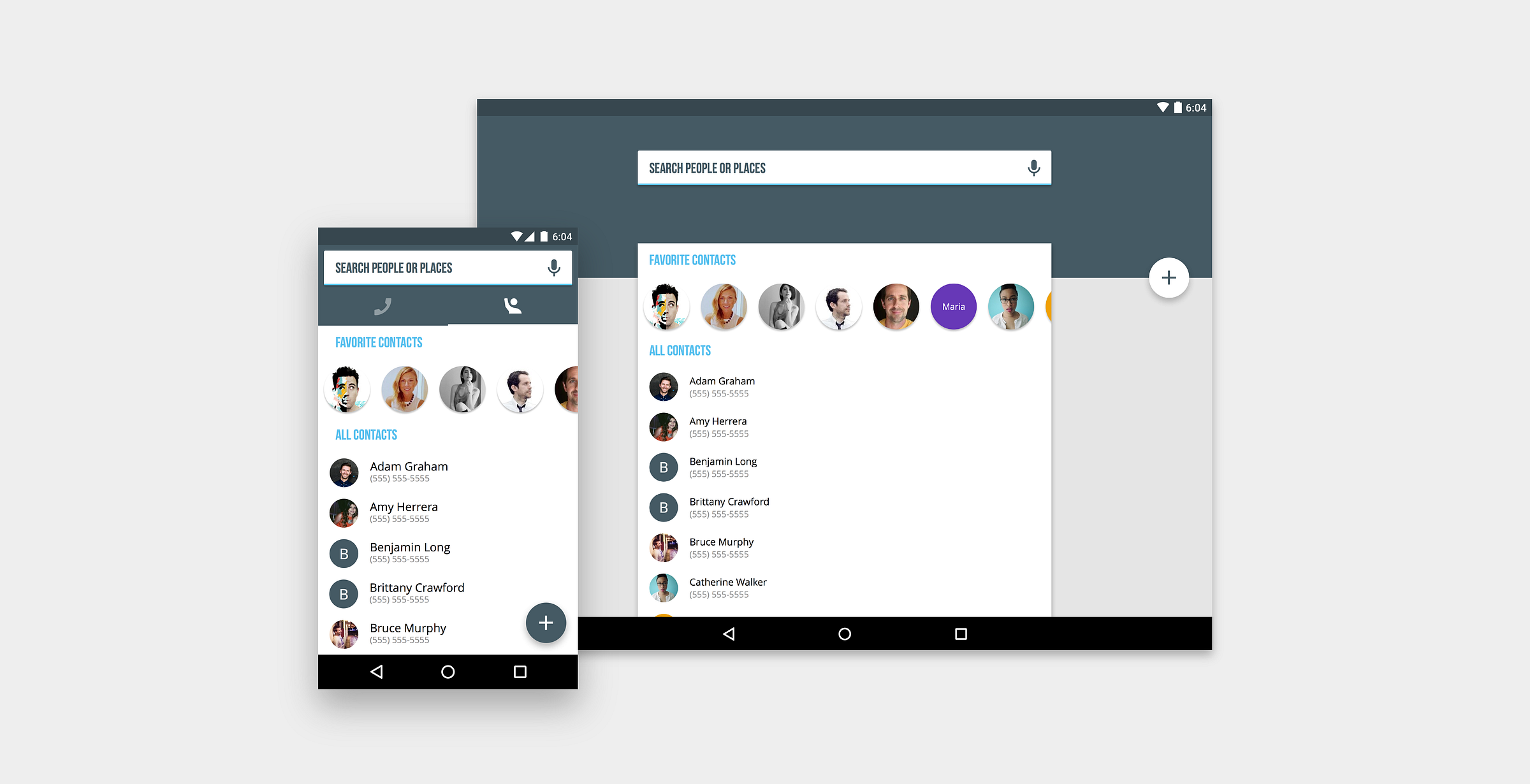

The image above shows Asteria’s “mother design” for the contacts tab. Basically, this screen displays a search box for finding contacts/businesses/etc., a tab bar for accessing the dialer, a sliding selection of frecents, an alphabetical scrolling list of contacts, and a floating action button for adding a new contact. On tablets where calling isn’t possible (either because there’s no data connectivity or no WiFi calling) the dialer tab will disappear.

Nothing immediately jumps out as crazy or out-of-this-world about the mother design, but it’s worth noting that this design may be a rare sight for the user population. If all goes according to plan, Asteria will undergo its first mutation (however mild it may be) before you ever see the interface.

The mother design exists as kind of an ideal archetypal state of existence, and will be rarely spotted in practice but very important in theory. It will serve as an origin point or “mother” for all the following mutations. Any special considerations for the product should be determined for the mother design, and product-motivated actions or features shouldn’t mutate out of the UI, but we’ll have to be open to those features having a life of their own. If all goes according to plan, those features will be accessible to a greater number of people under mutative design.

The mother design, then, should accept statistics and user research in the same way that most interface designs do today. The difference is that we will no longer accept this as a good stopping point — it’s just a strong foundation for future mutation.

Starter state 1

Let’s begin with user 1: A4E2V2B2P5D3. That means our user is an Adult, familiar with technology, low vision, not colorblind, with unlimited physical input and full data. For the sake of the example let’s assume the user reads English. The lighting condition will change as the user moves through their day.

In State 1, the only factor that will make an appreciable change to Asteria’s experience is the user’s vision. As stated before, this probably doesn’t need any special discovery by the app, since accessibility settings are at the system level. So Asteria would ideally find out from the system that the user is low vision, and make the first mutation by itself the first time the app runs.

But some accessibility features should have already been considered in the original design. For example, contrast. For standard text and vision, the minimum recommended text-background contrast is 3:1; to account for low visual acuity, WCAG raises that to 4.5:1.

Black text on a white background has a ratio of 21:1, so we’re obviously in the clear there. Our bluegrey color with white has a ratio of 4.4:1, which is close to, but not quite at, the recommended level, and certainly not up to the 7:1 recommendation for “enhanced” contrast. So, knowing the user has low vision, we can make an adjustment to get us all the way to enhanced contrast. The text size would of course be accounted for by the user’s system preference.
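These ratios come from the WCAG contrast formula, which is simple enough to sketch. One loud assumption: the post doesn’t give the hex value of the bluegrey, so `#607D8B` (Material’s Blue Grey 500) stands in for it here.

```python
def channel_to_linear(value_8bit):
    """Linearize one sRGB channel (0-255) as defined by WCAG 2.0."""
    c = value_8bit / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color):
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return (0.2126 * channel_to_linear(r)
            + 0.7152 * channel_to_linear(g)
            + 0.0722 * channel_to_linear(b))


def contrast_ratio(color_a, color_b):
    """WCAG contrast ratio: (lighter + 0.05) / (darker + 0.05)."""
    lighter, darker = sorted(
        (relative_luminance(color_a), relative_luminance(color_b)), reverse=True
    )
    return (lighter + 0.05) / (darker + 0.05)


ASSUMED_BLUEGREY = "#607D8B"  # assumption: stand-in for the post's bluegrey

print(round(contrast_ratio("#000000", "#FFFFFF"), 1))         # 21.0
print(round(contrast_ratio(ASSUMED_BLUEGREY, "#FFFFFF"), 1))  # about 4.4 for this assumed color
```

A mutative app could run a check like this against its own palette and darken the color until the ratio clears 7:1 for a low-vision user.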

What happens next? As discussed earlier, we could mutate further based on the user’s behavior. If the user frequently calls Amy Herrera for example, a phone shortcut icon could appear next to her contact entry. But that’s a pretty easy mutation that can already be accomplished today. So theoretically Asteria could stop mutating for user 1 right now, unless something changes in the future.

If we find that the user is lingering on this screen without tapping anything, or is swiping around aimlessly, the interface might surface labels to help them decide what to do, or point out important information. If the user is swiping up and down through contacts frequently, we can even highlight features like search. Once the user uses search a few times, the highlight can fade as we assume the user is now familiar with it. Here’s what that state would look like:

Starter state 2

The second case will be user 2: A2E2V1B2P5D1. So this user is a toddler who is familiar with technology, who is sighted, not colorblind, has unlimited input, and no data. Why would a toddler be using a contacts app, you ask? One perk of mutative design is that we don’t necessarily need a concrete answer to that question. We just need the infrastructure to react to this case.

For this user, the two factors most likely to impact our design considerations are that the user is a child, and that the device they’re using has no data connection (this may be temporary or permanent — we don’t know).

Notice that our randomized state says that the user, despite only being a few years old, is already “familiar” with technology. Still to be decided is how we would measure familiarity. Things like how many times the user backs out of an action (and how fast), how long they linger on a non-text-oriented screen (hunting for the right option), etc. could tell us this, and would measure “familiarity” relative to age. So “familiar” for a toddler would measure differently than the same characteristic for someone whose age group has a higher functioning average of “familiar” behavior.

That said, as UXMatters explained back in 2010, we shouldn’t automatically consider a design for children to be radically different at its core from a design for an adult. An important distinction to make, though, is that the UXMatters article dealt mainly with the web, assuming the user is on a computer.

Computers have a unifying input method that works the same for people of any age — a mouse. The mouse evens out the input by offering really great precision. Without that — on mobile devices, for example — we’re stuck with fingers. Fingers that could potentially be clumsy or not yet adapted to touch input.

According to my own anecdotal research (asking friends and colleagues who have toddlers), young children respond best to interactions that are clear and provide good feedback. This was perhaps the biggest takeaway from my highly informal survey.

Target size is also important, as toddlers might make fast, imprecise motions, and touch feedback on small targets will often be covered by the user’s hand.

I also learned that touch is actually an easier input method for young children to adapt to than something like mouse/cursor input. There’s a direct connection between the user’s hand and the interface, and the visible feedback mentioned earlier reinforces this connection immediately. This makes sense as — from the user perspective — fingers are a relatively uniform input method. A mouse adds at least one layer of abstraction, assuming the interface doesn’t take over scrolling mechanics or introduce other quirks.

Taking all of this into consideration, let’s take a look at our mutation for user 2.

From a static view, we can see that the FAB and its icon are larger and more pronounced against the rest of the interface, and the search action is highlighted in the same light blue accent color that we’ve seen before, prioritizing visual information for a user who may not be able to read yet. The list of contacts has received new, larger spacing to account for imprecise touches as well. Finally, the search cue (though our user may not be able to read it) has been changed to reflect that the user has no data.

With new colors and highlights, we also have a chance to build on explicit, clear interactions that should have already been built into the app. The blue highlight color can wash over the search bar reinforcing the action the user is about to take. If our user is able to type, they will, but the search action could simultaneously listen for speech input if the user tries that.

Having a user that’s a young child is interesting, because it’s a case where the interface might actually mutate toward the mother design as the child grows, learning to read and better understand interface elements.

The list elements come back together as we notice the user’s accuracy improving, and exaggerated elements like the FAB and search action can subtly shift back toward the mother design.

Example 2: Leto

Above is the mother design for a hypothetical launcher called Leto. Since this is just a sketch for demonstration purposes, there are no doubt some unresolved design considerations, but here are the basics.

The launcher comprises three spaces: apps, widgets, and the hot-seat. On phone, these condense into two screens. On tablet they’re side by side. Apps are organized alphabetically, with user-created or automatic folders living up top.

At the bottom is a hot-seat for quick access to commonly used or favorite apps, and a button to — in this case — expand the Google Now stream from the existing sheet-like element. For this example I tried to keep the concept as simple as possible, but with some embellishments like the light-mode nav bar and status bar (the latter changing with the user’s wallpaper selection).

Since the launcher is a grid, its content is ripe for seamless mutations related to information density, target size, etc. Its modular structure naturally lends itself to adding, removing, and altering individual pieces — another win for grids!

But let’s get down to business — who are our sample users, and what are their starter states?

Starter state 1

User 1 is A4E3V2B2P3D3, meaning the user is an adult who is experienced with tech, has low vision, is not color blind, has touch-only input, and full data. For interfaces that do not involve voice input explicitly, touch-only will equate to unlimited input.

We already saw how Asteria mutated for a low-vision starter state, but a launcher like Leto is a somewhat different challenge. After all, outside of a launcher we generally don’t have to contend with something as wide open as a user’s wallpaper selection. The wallpaper could be anything, including a blank white field, but icon labels must remain legible no matter what. The hot seat and nav icons are already safe thanks to the expanding card at the bottom.

For this example I’ve used one of my own illustrations as a wallpaper to give a good sample of texture and color variation that might occur with a user-selected wallpaper.

In this variation, the grid has been expanded to accommodate larger icons and larger labels, along with deeper, darker drop shadows to give the text labels some protection. The indicators in the hot seat are also darker.

This variation increases visibility and contrast quite a bit, but it may not be enough. If we find that the user is still having trouble finding or accurately tapping on an item, we can apply a gentle 12% scrim over the wallpaper, while simultaneously inverting the status bar like so:

Starter state 2

On to user 2: A4E1V1B2P5D2. This user is an adult who is new to or unfamiliar with digital interfaces, who is sighted, not colorblind, has unlimited input, and low data.

This is an interesting case, especially for a launcher. The immediate (and easiest) solution is to turn to a first-run process. After all, a launcher is a complicated experience, simple though it may seem.

There are a lot of elements to know about, even in Leto. App icons, folders, widgets, the hot-seat, the G button, how to create folders, how to add widgets, how to remove apps, widgets, or folders, how to rename a folder, how to change the wallpaper, and any additional settings we may build in.

But while it’s easy to fall back on an explicit page-by-page instruction booklet for the user, there’s a better way. All of this learning doesn’t have to happen all at once, and I would argue it makes sense for the user to experience learning the launcher’s features over time in a way that’s organic and nearly invisible. Probably this goes for any interface, not just launchers.

The launcher on phones gives users an exposed “new folder” mechanic to get them used to dragging apps up to the folder space, and the “dots” indicators on the hot-seat transform to a different kind of indicator that will hopefully prompt a swipe over to the widget space. We can see the widget space on tablet, prompting the user to either add a widget or dismiss the space for now.

Obviously an app could implement a kind of in-line educational process like this today, but the trick — the thing that’s relevant to our discussion — is in the possibility of learning whether or not the user needs this process in the first place.

For this case, the user does need it, but more experienced users would just dive in and understand the launcher based on their understanding of other interfaces.

This is something that would again be handled by the system’s own setup process, measuring invisible things like how users behave with buttons, whether they appear comfortable with swiping, touching, holding, how long it takes to read through some instructions, how and when the back action is used, etc. It could even be the case that the user only needs part of these instructions. Maybe they are comfortable with scrolling through a grid when some of the grid’s content is hidden, but aren’t quite adept at manipulating items in that grid yet.

For the second transformation we’ll invent some fiction — the user has dismissed the widget space for now, but has become accustomed to creating folders and maneuvering through the grid. In this case, the launcher would exactly match the mother state, and the user could add widgets as usual if they decide to.

What’s next?

Now that we’re reaching the end of this first exploration, what happens next?

The conversation about mutative design is just getting started. My hope is that this post will encourage designers and developers to think about, research, explore, and discuss the possibilities of mutative design, ultimately working toward making this new methodology into something real that can help craft interfaces that work for every user.

To that end, the design resources from Project Phoebe are now open source. You can find my original Sketch files linked on GitHub below, along with the fonts I used.

If you want to keep the conversation going, check out the community below on Google+.

Your turn

Design source files: source.phoebe.xyz

Fonts: Bebas Neue, Open Sans

Faces: UIFaces.com

Let’s talk

Community: Mutative Design on Google+

#mutatemore #mutativedesign wherever hashtags are accepted